ENTERPRISE AI FOUNDATION

Thorben delivers a secure, federated platform to deploy, control, and manage AI comprehensively across your entire organization, project, and/or application. Our enterprise-grade AI solution transforms AI chaos into strategic advantage, providing efficiency, unified governance, security, and operational excellence.

AI HUB to connect siloed data and systems

- Organization Specific Data

- Guardrails, Role Based Access Controls, Data Security

- Access to multiple LLMs (choice)

- Enterprise Zero Trust Architecture principles

- GovCloud (IL2, IL4, IL5), IL6, IC, Commercial Cloud

- ATO Ready

ORGANIZATION SPECIFIC AI

We Build, Implement, and Train

- YOUR PERSONAS

- YOUR DATA LIBRARIES (Secure RAG)

- YOUR WORKFLOWS (Custom AI Agents)

- YOUR SOLUTION

PERFORMANCE FOCUSED AI

Improves

- USER PRODUCTIVITY

- USE OF RESOURCES

- DEPLOYABLE CAPABILITIES

- AI USABILITY

When, Where and How Users Need It

SECURE ORGANIZATIONAL FOUNDATION AI (SOFA)

Thorben developed its Secure Organizational Foundation AI (SOFA) to help organizations establish their own AI capability based on their own data and business processes. Thorben's SOFA solution serves as your own innovation lab and AI foundation to incrementally add AI capabilities to your organization based on how you actually operate rather than someone else's idea of how you should operate.

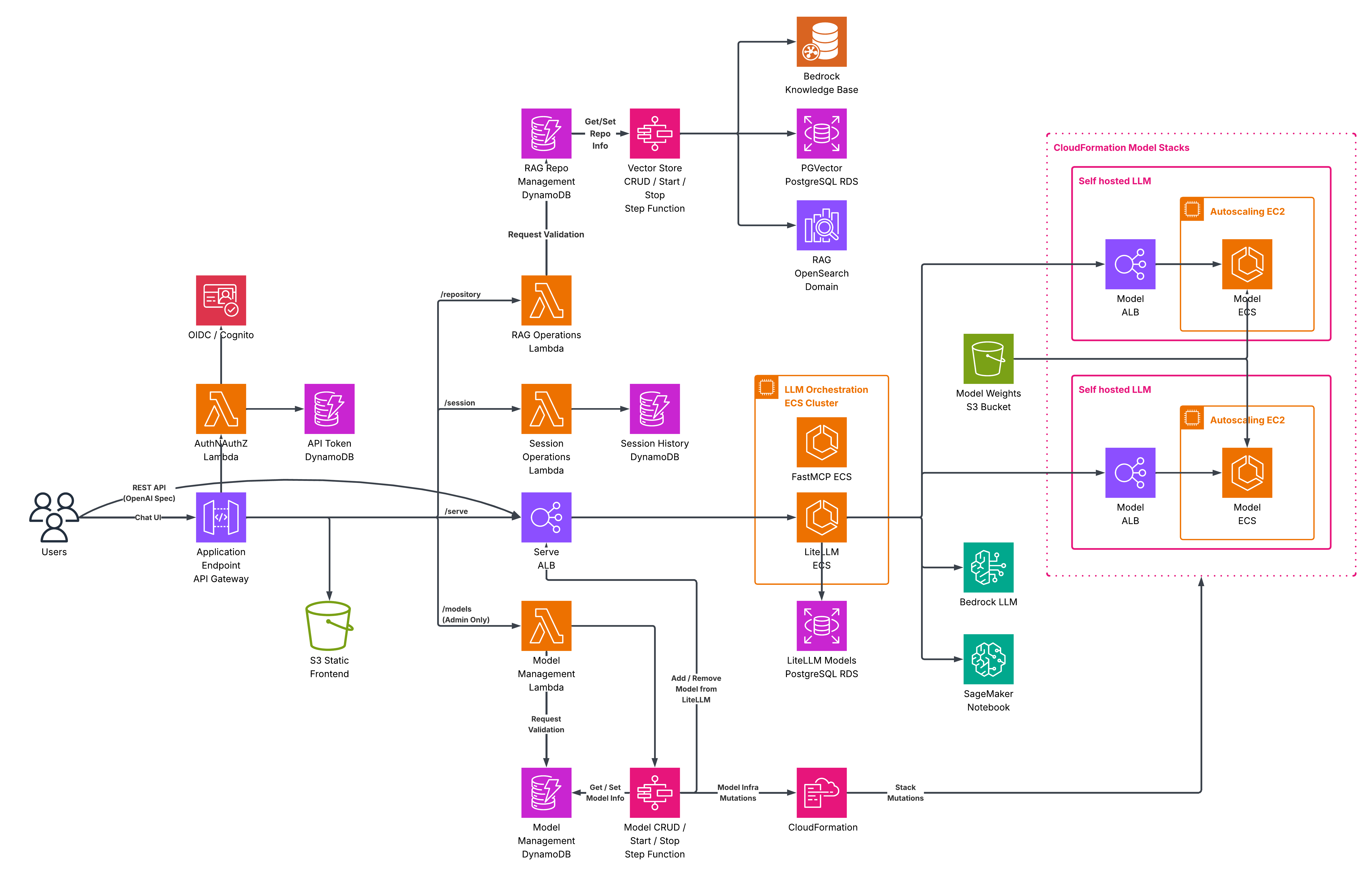

Thorben built the SOFA solution on Amazon Bedrock, AWS LISA, and open-source LiteLLM, all operating within AWS, to accelerate the deployment and standardize the operationalization of Generative AI applications and Machine Learning capabilities. The SOFA solution is rooted in AWS Well-Architected best practices and Zero Trust Architecture principles. SOFA offers customers a proven ATO-ready platform to repeatedly deploy customer-specific AI capabilities (AI Agents, RAG, Chatbot, Agentic AI) within the enterprise at the corporate, program, or system level.

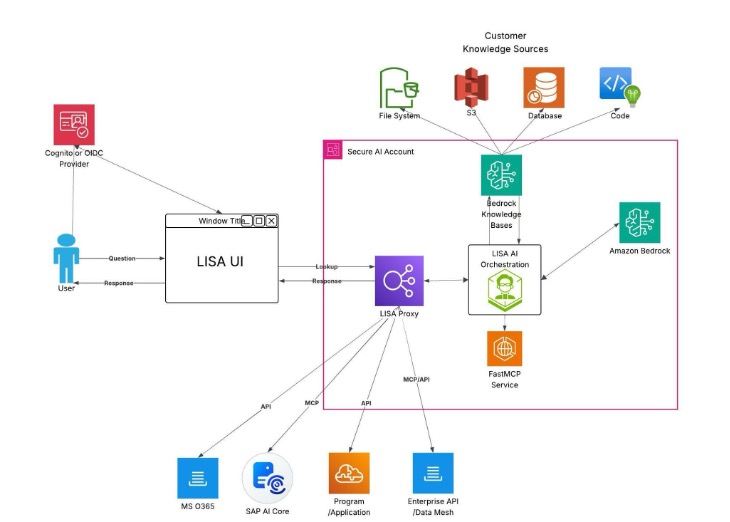

The SOFA solution's MCP and API Hub capabilities can serve as a central AI hub and bridge AI Agents across the enterprise. Thorben combines established and proven AWS services to deliver AI capabilities using this architecture.

Thorben built our Secure Organizational Foundation AI with government security requirements in mind.

Capabilities

Large Language Models (LLM) Access

Definition

Predicts answers based on training data and immediate context, generating intelligent responses through massive datasets and sophisticated prompting.

Thorben Implementation

The baseline provides Amazon Bedrock Foundation Models with a comprehensive LISA chatbot user interface. The LISA orchestration layer integrates additional custom LLMs, Amazon Bedrock models, and/or 100+ OpenAI-compatible LLMs.

Corporate Retrieval-Augmented Generation (RAG)

Definition

Combines LLM capabilities with knowledge base connections. Retrieves relevant information from document stores or systems, augmenting prompts with contextual data.

Thorben Implementation

Robust RAG capabilities with role-based access controls (via SPARK), administrative UI, and security for maintaining RAG content integrity and relevance.

OpenAI Application Programming Interface (API)

Definition

Enables systems to interact with RAG LLMs for predefined tasks via structured conversation. Detects intent, calls external tools or APIs, and manages responses for generative or agentic AI workflows.

Thorben Implementation

The foundation includes an OpenAI-compatible API layer via LiteLLM to provide API integration, enabling system-to-system AI communications.
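As a rough illustration of what a system-to-system call through an OpenAI-compatible layer can look like, the sketch below builds (but does not send) a chat completion request using only the Python standard library. The base URL, API key, and model name are placeholders invented for this example, not actual SOFA values. The request shape follows the widely used OpenAI chat completions format.

```python
import json
import urllib.request

# Hypothetical values for illustration only; a real deployment supplies its own.
BASE_URL = "https://sofa.example.internal/v1"
API_KEY = "sk-placeholder"
MODEL = "example-model"


def build_chat_request(prompt: str, system: str = "You are a helpful assistant.") -> urllib.request.Request:
    """Build an OpenAI-format chat completion request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


request = build_chat_request("Summarize the open help desk tickets.")
body = json.loads(request.data)
print(request.full_url)
print(json.dumps(body, indent=2))
```

Because the calling system only depends on this request format, the model behind the endpoint can be swapped without changing the caller.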

Model Context Protocol (MCP) Integration

Definition

AI protocol for creating processes through bidirectional conversations, allowing AI agents to autonomously discover, understand, and securely interact with tools and data sources.

Thorben Implementation

Foundation integrates LiteLLM and FastMCP to provide MCP capabilities and Model Orchestration enabling agentic AI processes with comprehensive security and control mechanisms.
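To make the discover-then-interact pattern concrete, the sketch below mimics the two MCP tool messages (`tools/list` and `tools/call`) as plain JSON-RPC 2.0 dictionaries. It is a toy dispatcher written with the standard library, not FastMCP and not a complete MCP server: it omits the initialization handshake, transport, and authentication. The tool name `lookup_ticket` and its behavior are invented for illustration.

```python
import json

# Hypothetical tool registry; the tool name and logic are invented for illustration.
TOOLS = {
    "lookup_ticket": {
        "description": "Return the status of a help desk ticket.",
        "inputSchema": {
            "type": "object",
            "properties": {"ticket_id": {"type": "string"}},
            "required": ["ticket_id"],
        },
        "handler": lambda args: f"Ticket {args['ticket_id']} is open.",
    }
}


def handle(message: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the two MCP tool methods sketched here."""
    method = message["method"]
    if method == "tools/list":
        # Discovery: describe each tool and the arguments it accepts.
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        # Invocation: run the named tool and wrap its output as text content.
        params = message["params"]
        text = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


# An agent first discovers the available tools, then calls one of them.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "lookup_ticket", "arguments": {"ticket_id": "T-100"}},
})
print(json.dumps(call["result"], indent=2))
```

In a real deployment, a library such as FastMCP would handle the protocol details, and the security and control mechanisms described above would sit in front of each tool call.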

Key Features

Enterprise-Grade Standards

Unified AI solution providing consistent governance, security, and operational standards across all deployments

Repeatable Deployment

Customer-definable, standardized processes enable rapid, secure deployment of AI use cases with compliance and best practices

Flexible Modular Access

Seamless integration with a wide range of LLMs, allowing organizations to select optimal solutions for each use case

Federated AI Orchestration

A centralized hub coordinates AI activities across the enterprise, enabling efficient resource sharing and collaboration

Security Guardrails

Comprehensive data access controls ensure compliance while maintaining operational flexibility and performance

Unified Platform Management

SOFA provides an admin UI to deploy features and manage users, RAGs, AI APIs, and MCP processes from a single interface

Implementation Strategy

Thorben implements SOFA within client AWS accounts to address the AI solution areas that clients select. Thorben's SOFA manages AI capabilities in a unified, secure, and scalable manner for enterprises on an ongoing basis. Benefits include avoiding redundant data ingestion by creating customer-specific RAG, sharing AI across fragmented AI silos via OpenAI standards, and AWS FinOps cost management that avoids unpredictable, ever-escalating SaaS charges that bear no relation to the benefits delivered.

Thorben has a defined and proven AI implementation process. During this process, Thorben works with clients to identify their goals and requirements, their biggest challenges and pain points, their current IT landscape, their current data management approach, their integration requirements, their performance needs, and their project scope. Thorben provides performance-focused AI to improve user productivity, resource usage, and deployable capabilities.

Thorben's SOFA provides a full range of AI capabilities including Chatbot functions. Thorben offers to provide you with a demonstration so we can start building your Enterprise AI foundation together.

Core Technology Stack

AWS LISA Chat UI

Provides comprehensive UI and RAG management capabilities for enterprise AI interactions

Retrieval-Augmented Generation (RAG)

Amazon Bedrock Knowledge Bases for efficient retrieval of relevant external data to enhance model responses

Amazon Bedrock for Model Choice

Powers access to diverse, state-of-the-art Foundational Models

FastMCP

Enables advanced MCP orchestration and agentic AI workflows

AWS LISA Serve with LiteLLM

Provides inference orchestration and management

Security & Compliance Framework

FedRAMP High Services

All components meet stringent federal security requirements for high-impact data

AWS Well-Architected

Built on AWS Well-Architected Framework ensuring operational excellence, security, reliability, performance, and cost optimization

Zero Trust Architecture

Implements zero trust security principles with continuous verification and least-privilege access controls

Technical Architecture

AI is proliferating across enterprises and solutions, creating integration issues, new FinOps cost management considerations, and security vulnerabilities. Thorben delivers a secure, federated platform to deploy, control, and manage AI comprehensively across entire organizations. SOFA is a scalable architecture built and deployed using a collection of FedRAMP-approved AWS services to provide an ATO-ready AI solution.

The SOFA Solution can integrate with AWS Operations and leverage standard Day 2 Operational services to provide ongoing management, monitoring, and optimization of SOFA after deployment.

Model Access

- Bring your own Foundation Models, leverage Amazon Bedrock, or connect to third-party LLMs

- Access open-source and proprietary generative AI models through SOFA's LiteLLM OpenAI-compatible layer, which allows plug-and-play capabilities, the ability to scale, and the ability to use the best Foundation Model per process

- Plug-and-play model provides flexibility and supports future technology adoption
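Selecting the best Foundation Model per process can be pictured as a small routing table. The sketch below is an assumption about how such a mapping might be expressed, not SOFA's actual configuration format. The process names, provider labels, and model identifiers are all invented placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRoute:
    provider: str  # e.g. "bedrock", "self-hosted", "third-party"
    model: str     # placeholder model identifier


# Hypothetical routing table: process names and model identifiers are invented.
ROUTES = {
    "help_desk_chat": ModelRoute("bedrock", "example-chat-model"),
    "code_generation": ModelRoute("self-hosted", "example-code-model"),
}
DEFAULT_ROUTE = ModelRoute("bedrock", "example-general-model")


def route_for(process: str) -> ModelRoute:
    """Return the configured model for a process, falling back to a default."""
    return ROUTES.get(process, DEFAULT_ROUTE)


print(route_for("code_generation"))
print(route_for("unlisted_process"))
```

Adopting a new model then means changing one table entry rather than the applications that call it.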

Retrieval Augmented Generation (RAG)

- Ability to connect to various organization-specific data sources (MS Teams, S3, SharePoint, Windows share, etc.) and create customer-specific RAG knowledge sources

- Vector store options such as Amazon Bedrock, Amazon OpenSearch, and/or PGVector provide flexibility in capability and cost

- Data tagging and role-based access control implementation options
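The combination of data tagging and role-based access control can be sketched as a filter applied before retrieval results ever reach the prompt. The example below is a minimal illustration under stated assumptions: it uses naive keyword overlap in place of a real vector store, and the documents, role names, and function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_roles: frozenset  # roles tagged on the chunk at ingestion time


# Hypothetical tagged knowledge base; content and roles are invented.
KNOWLEDGE_BASE = [
    Chunk("Travel requests are approved by the program manager.", frozenset({"staff", "finance"})),
    Chunk("The FY budget ceiling for travel is held by finance.", frozenset({"finance"})),
    Chunk("Password resets are handled by the help desk.", frozenset({"staff", "finance"})),
]


def retrieve(query: str, user_roles: set, top_k: int = 2) -> list:
    """Filter chunks by role first, then rank the rest by keyword overlap."""
    query_terms = set(query.lower().split())
    visible = [c for c in KNOWLEDGE_BASE if c.allowed_roles & user_roles]
    scored = [(len(query_terms & set(c.text.lower().rstrip(".").split())), c) for c in visible]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [c for _, c in ranked[:top_k]]


def build_prompt(query: str, user_roles: set) -> str:
    """Augment the user's question with only the context they may see."""
    context = "\n".join(f"- {c.text}" for c in retrieve(query, user_roles))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("travel budget", {"staff"}))
print(build_prompt("travel budget", {"finance"}))
```

Filtering before ranking means restricted content never enters the model's context, so the model cannot leak it in a response.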

AI ChatBot User Interface

- Preconfigured Chatbot User Interface (UI) to query Custom RAG using LLM of choice

- Tracks Chat Sessions

- Identity and Access Management (IAM) and Single Sign-On (SSO) capabilities to control access to the UI via customer LDAP or IAM

Administration

- Ability to configure multiple Foundation Models and select LLMs per process

- Ability to maintain document repositories and custom RAG knowledge bases with Need-to-Know access controls

- Ability to configure APIs to target systems for data sharing

- IaC for Solution Maintenance

Observability

- Integrate with the customer's existing AWS Observability framework or deploy AWS Observability as an additional service

AI FOUNDATION CAPABILITIES

Thorben's SOFA provides foundational capabilities to protect privacy, implement cybersecurity best practices and protect national security while using AI on an ongoing basis.

ENTERPRISE GRADE CENTRALIZATION

Unified AI solution provides consistent governance, security, and operational standards across all deployments. SOFA follows the AWS cost model: no subscription or licensing fees are required because AWS service costs are based on usage. AWS software development teams back AWS services with ongoing improvements.

REPEATABLE SECURE DEPLOYMENT

Standardized processes enable rapid, secure deployment of AI use cases with built-in compliance and best practices including support for Anthropic's Model Context Protocol (MCP) and APIs.

FLEXIBLE MODULAR ACCESS

Seamless integration with Large Language Models (LLMs) through the OpenAI API format allows organizations to select optimal solutions for each use case. The chat user interface (UI) supports model management, model prompting, document summarization, chat session management, and prompt libraries.

FEDERATED AI ORCHESTRATION

Centralized hub can coordinate AI activities across the enterprise, enabling efficient resource sharing and collaboration. SOFA centralizes and standardizes unique API calls to third-party model providers via LiteLLM. LISA standardizes the AI API calls into the OpenAI format automatically for MCP process creation.

SECURITY GUARDRAILS

Comprehensive data access controls ensure compliance with Need-to-Know requirements while maintaining operational flexibility and performance. Thorben's SOFA leverages FedRAMP High-compliant services and can be deployed in any AWS region and integrated with AWS operations and log collection best practices.

UNIFIED PLATFORM MANAGEMENT

Build and manage RAGs, AI APIs, and MCP processes from a single platform serving enterprise, projects, and/or systems. Thorben's SOFA leverages AWS Foundational operational services for integration with access control, DevSecOps, FinOps, logging, observability and security, or integrates with the customer's existing AWS Landing Zone.

AI Solutions we offer to run in our Secure Organizational Foundation AI include:

- Software Development for SAP ERP

- Software Development for MS Power Apps

- Knowledge Management for SAP ERP

- Data Management for Federal

- Data Management for Commercial

- Help Desk Chatbot for Federal

- Help Desk Chatbot for Commercial

- Acquisition Management for Federal

- Acquisition Management for Commercial

- Service Delivery for Federal

- Service Delivery for Commercial

- Custom Processes for Federal

- Custom Processes for Commercial